84 · SPEC Kit 292

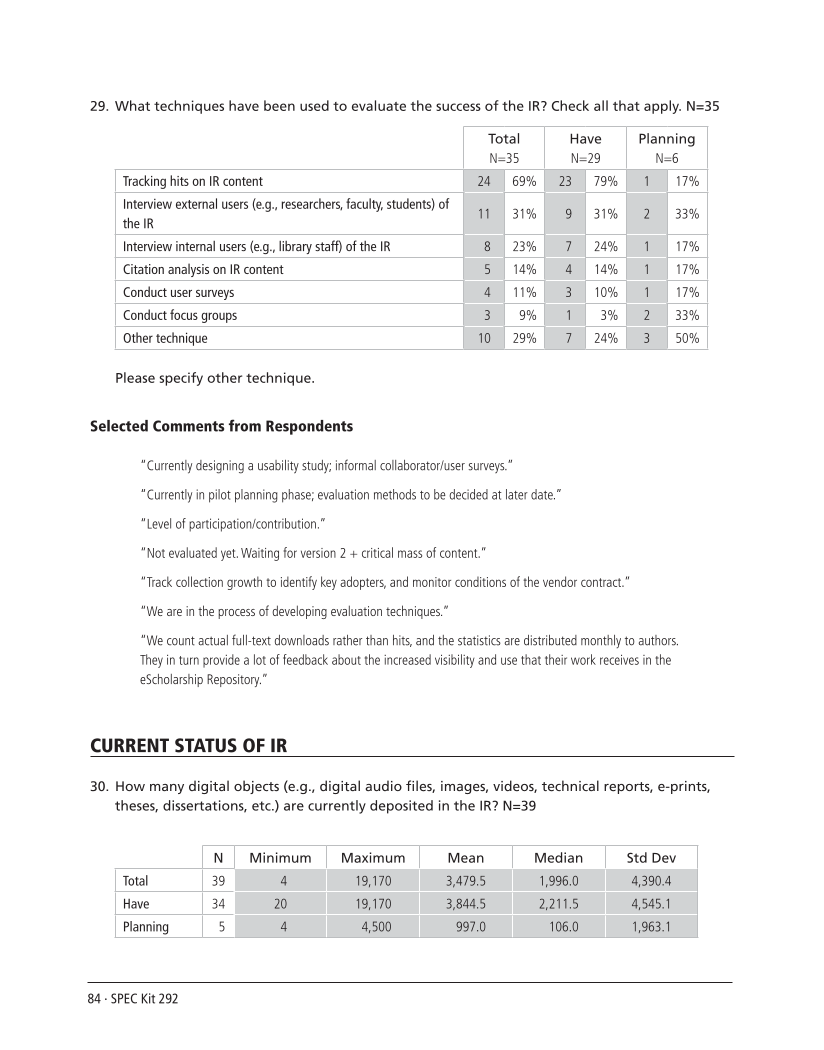

29. What techniques have been used to evaluate the success of the IR? Check all that apply. N=35

| Technique | Total (N=35) | Total % | Have (N=29) | Have % | Planning (N=6) | Planning % |
|---|---|---|---|---|---|---|
| Tracking hits on IR content | 24 | 69% | 23 | 79% | 1 | 17% |
| Interview external users (e.g., researchers, faculty, students) of the IR | 11 | 31% | 9 | 31% | 2 | 33% |
| Interview internal users (e.g., library staff) of the IR | 8 | 23% | 7 | 24% | 1 | 17% |
| Citation analysis on IR content | 5 | 14% | 4 | 14% | 1 | 17% |
| Conduct user surveys | 4 | 11% | 3 | 10% | 1 | 17% |
| Conduct focus groups | 3 | 9% | 1 | 3% | 2 | 33% |
| Other technique | 10 | 29% | 7 | 24% | 3 | 50% |
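The percentages in the question 29 table appear to be each count divided by its group's N and rounded to a whole number. The following is a minimal sketch of that arithmetic, not part of the survey itself; the names `GROUP_N`, `REPORTED`, `pct`, and `mismatches` are hypothetical, and the round-half-up rule is an assumption.

```python
import math

# Respondent counts per group, from the header of the question 29 table.
GROUP_N = {"Total": 35, "Have": 29, "Planning": 6}

# (count, reported percent) per group, copied from the table rows.
REPORTED = {
    "Tracking hits on IR content": {"Total": (24, 69), "Have": (23, 79), "Planning": (1, 17)},
    "Interview external users": {"Total": (11, 31), "Have": (9, 31), "Planning": (2, 33)},
    "Interview internal users": {"Total": (8, 23), "Have": (7, 24), "Planning": (1, 17)},
    "Citation analysis on IR content": {"Total": (5, 14), "Have": (4, 14), "Planning": (1, 17)},
    "Conduct user surveys": {"Total": (4, 11), "Have": (3, 10), "Planning": (1, 17)},
    "Conduct focus groups": {"Total": (3, 9), "Have": (1, 3), "Planning": (2, 33)},
    "Other technique": {"Total": (10, 29), "Have": (7, 24), "Planning": (3, 50)},
}


def pct(count, n):
    """Return count as a whole-number percentage of n (assumed round half up)."""
    return math.floor(100 * count / n + 0.5)


def mismatches():
    """List (technique, group) pairs where the table disagrees with the arithmetic."""
    bad = []
    for technique, groups in REPORTED.items():
        for group, (count, reported) in groups.items():
            if pct(count, GROUP_N[group]) != reported:
                bad.append((technique, group))
        # The Have and Planning counts should add up to the Total count.
        if groups["Have"][0] + groups["Planning"][0] != groups["Total"][0]:
            bad.append((technique, "sum"))
    return bad
```

Because respondents could check all techniques that apply, the percentages in a column are not expected to sum to 100%.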

Please specify other technique.

Selected Comments from Respondents

“Currently designing a usability study informal collaborator/user surveys.”

“Currently in pilot planning phase evaluation methods to be decided at later date.”

“Level of participation/contribution.”

“Not evaluated yet. Waiting for version 2 +critical mass of content.”

“Track collection growth to identify key adopters, and monitor conditions of the vendor contract.”

“We are in the process of developing evaluation techniques.”

“We count actual full-text downloads rather than hits, and the statistics are distributed monthly to authors. They in turn provide a lot of feedback about the increased visibility and use that their work receives in the eScholarship Repository.”

Current Status of IR

30. How many digital objects (e.g., digital audio files, images, videos, technical reports, e-prints, theses, dissertations, etc.) are currently deposited in the IR? N=39

| | N | Minimum | Maximum | Mean | Median | Std Dev |
|---|---|---|---|---|---|---|
| Total | 39 | 4 | 19,170 | 3,479.5 | 1,996.0 | 4,390.4 |
| Have | 34 | 20 | 19,170 | 3,844.5 | 2,211.5 | 4,545.1 |
| Planning | 5 | 4 | 4,500 | 997.0 | 106.0 | 1,963.1 |
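The Total row of the question 30 table can be cross-checked against the Have and Planning rows: the N values add, the minimum and maximum are the extremes of the two groups, and the mean is the N-weighted average of the group means. The sketch below illustrates that check under the assumption that the two groups are disjoint and together make up the Total row; the names `HAVE`, `PLANNING`, `TOTAL`, and `pool` are hypothetical.

```python
# N, minimum, maximum, and mean per group, copied from the question 30 table.
HAVE = {"n": 34, "min": 20, "max": 19170, "mean": 3844.5}
PLANNING = {"n": 5, "min": 4, "max": 4500, "mean": 997.0}
TOTAL = {"n": 39, "min": 4, "max": 19170, "mean": 3479.5}


def pool(a, b):
    """Combine the summary statistics of two disjoint groups."""
    n = a["n"] + b["n"]
    return {
        "n": n,
        "min": min(a["min"], b["min"]),
        "max": max(a["max"], b["max"]),
        # Weighted mean: each group mean counts in proportion to its N.
        "mean": (a["n"] * a["mean"] + b["n"] * b["mean"]) / n,
    }


pooled = pool(HAVE, PLANNING)

# The group means are rounded to one decimal place in the table, so the
# pooled mean is compared to the reported one with a small tolerance.
mean_gap = abs(pooled["mean"] - TOTAL["mean"])
```

The median is left out of the check because it cannot be recovered from the group summaries alone.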
